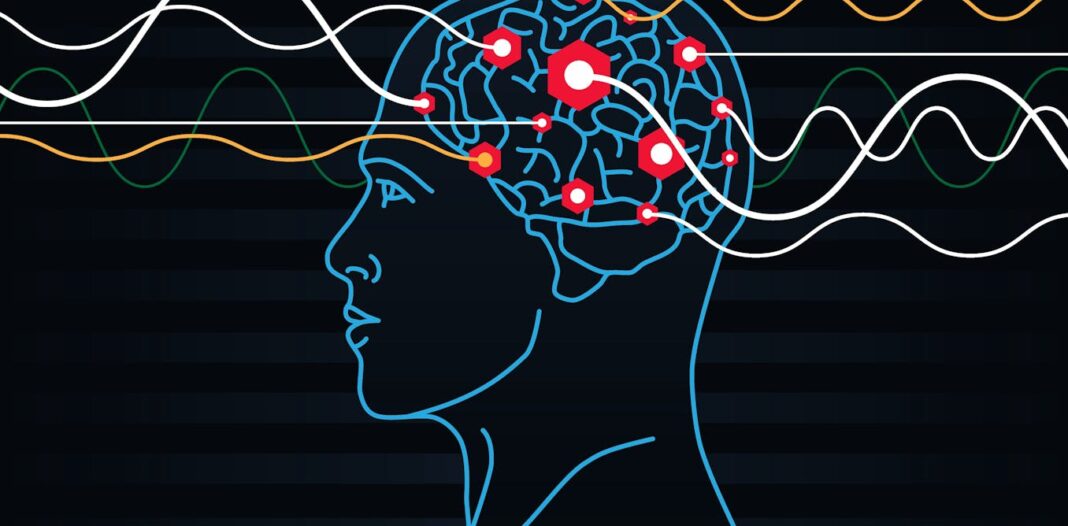

Earlier this year, Neuralink implanted a chip in the brain of 29-year-old US man Noland Arbaugh, who is paralysed from the shoulders down. The chip has enabled Arbaugh to move a mouse pointer on a screen just by imagining it moving.

In May 2023, US researchers also announced a non-invasive way to “decode” the words someone is thinking from brain scans combined with generative AI. A similar project sparked headlines about a “mind-reading AI hat”.

Can neural implants and generative AI really “read minds”? Is the day coming when computers can spit out accurate real-time transcripts of our thoughts for anyone to read?

Such technology might have some benefits – particularly for advertisers looking for new sources of customer targeting data – but it would demolish the last bastion of privacy: the seclusion of our own minds. Before we panic, though, we should stop to ask: is what neural implants and generative AI can do really “reading minds”?

The brain and the mind

As far as we know, conscious experience arises from the activity of the brain. This means any conscious mental state should have what philosophers and cognitive scientists call a “neural correlate”: a particular pattern of nerve cells (neurons) firing in the brain.

So, for each conscious mental state you can be in – whether it’s thinking about the Roman Empire, or imagining a cursor moving – there is some corresponding pattern of activity in your brain.

So, clearly, if a device can track our brain states, it should be able to simply read our minds. Right?

Well, for real-time AI-powered mind-reading to be possible, we need to be able to identify precise, one-to-one correspondences between particular conscious mental states and brain states. And this may not be possible.

Rough matches

To read a mind from brain activity, one must know precisely which brain states correspond to particular mental states. This means, for instance, one needs to distinguish the brain states that correspond to seeing a red rose from those that correspond to smelling a red rose, or touching a red rose, or imagining a red rose, or thinking that red roses are your mother’s favourite.

One must also distinguish all of those brain states from the brain states that correspond to seeing, smelling, touching, imagining or thinking about something else, like a ripe lemon. And so on, for everything else you can perceive, imagine or have thoughts about.

To say this is difficult would be an understatement.

Take face perception as an example. The conscious perception of a face involves all kinds of neural activity.

But a great deal of this activity seems to relate to processes that come before or after the conscious perception of the face – things like working memory, selective attention, self-monitoring, task planning and reporting.

Winnowing out those neural processes that are solely and specifically responsible for the conscious perception of a face is a herculean task, and one that current neuroscience is not close to solving.

Even if this task were completed, neuroscientists would still only have found the neural correlates of a certain kind of conscious experience: namely, the general experience of a face. They would not thereby have found the neural correlates of the experiences of particular faces.

So, even if astonishing advances were to occur in neuroscience, the would-be mind-reader still wouldn’t necessarily be able to tell from a brain scan whether you are seeing Barack Obama, your mother, or a face you don’t recognise.

That wouldn’t be much to write home about, as far as mind-reading is concerned.

But what about AI?

But don’t recent headlines involving neural implants and AI show that some mental states can be read, like imagining a cursor moving and engaging in inner speech?

Not necessarily. Take the neural implants first.

Neural implants are typically designed to help a patient perform a particular task: moving a cursor on a screen, for example. To do that, they don’t need to identify precisely the neural processes that are correlated with the intention to move the cursor. They just need to get an approximate fix on the neural processes that tend to go along with those intentions, some of which might actually be underpinning other, related mental acts like task planning, memory and so on.

Thus, although the success of neural implants is certainly impressive – and future implants are likely to collect more detailed information about brain activity – it doesn’t show that precise one-to-one mappings between particular mental states and particular brain states have been identified. And so, it doesn’t make real mind-reading any more likely.

Maxim Gaigul / Shutterstock

Now take the “decoding” of inner speech by a system made up of a non-invasive brain scan plus generative AI, as reported in this study. This system was designed to “decode” the contents of continuous narratives from brain scans while participants were either listening to podcasts, reciting stories in their heads, or watching movies. The system isn’t very accurate – but still, the fact that it did better than random chance at predicting these mental contents is seriously impressive.

So, let’s imagine the system could predict continuous narratives from brain scans with total accuracy. Like the neural implant, the system would only be optimised for that task: it wouldn’t be effective at tracking any other mental activity.

How much mental activity could this system monitor? That depends: how much of our mental lives consists of imagining, perceiving or otherwise thinking about continuous, well-formed narratives that can be expressed in straightforward language?

Not much.

Our mental lives are flickering, lightning-fast, multiple-stream affairs, involving real-time percepts, memories, expectations and imaginings, all at once. It’s hard to see how a transcript produced by even the most fine-tuned brain scanner, coupled to the smartest AI, could capture all of that faithfully.

The future of mind-reading

In the past few years, AI development has shown a tendency to vault over seemingly insurmountable hurdles. So it’s unwise to rule out the possibility of AI-powered mind-reading entirely.

But given the complexity of our mental lives, and how little we know about the brain – neuroscience is still in its infancy, after all – confident predictions about AI-powered mind-reading should be taken with a grain of salt.