Are you lying? Do you have a racial bias? Is your moral compass intact?

To find out what you think or feel, we usually have to take your word for it. But questionnaires and other explicit measures to reveal what’s on your mind are imperfect: you may choose to hide your true beliefs or you may not even be aware of them.

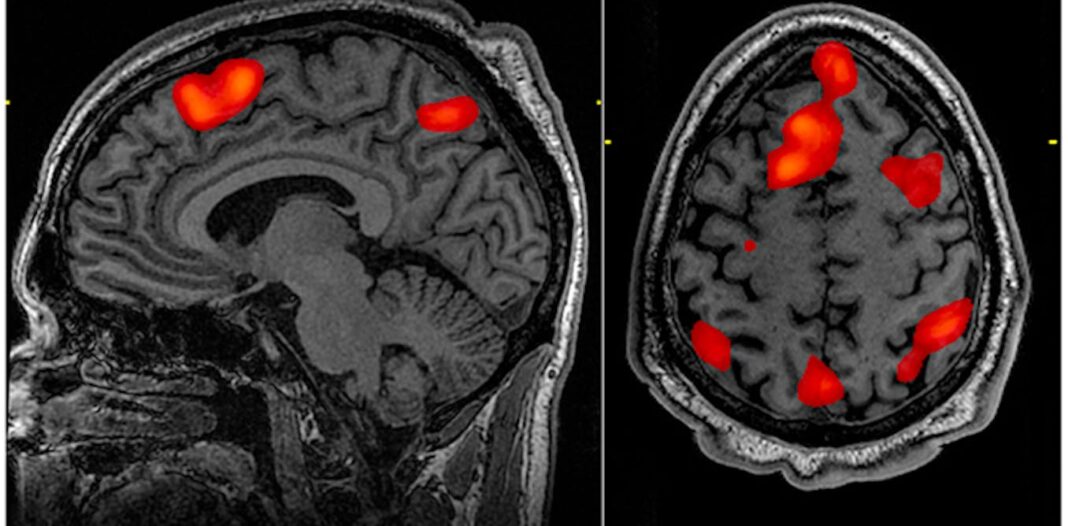

But now there is a technology that enables us to “read the mind” with growing accuracy: functional magnetic resonance imaging (fMRI). It measures brain activity indirectly by tracking changes in blood flow – making it possible for neuroscientists to observe the brain in action. Because the technology is safe and effective, fMRI has revolutionised our understanding of the human brain. It has shed light on areas important for speech, movement, memory and many other processes.

More recently, researchers have used fMRI for more elaborate purposes. One of the most remarkable studies comes from Jack Gallant’s lab at the University of California. His team showed movie trailers to their volunteers and managed to reconstruct these video clips based on the subjects’ brain activity, using a machine learning algorithm.

In this approach, the computer developed a model based on the subject’s brain activity rather than being fed a pre-programmed solution by the researchers. The model improved with practice and, after having access to enough data, it was able to decode brain activity. The reconstructed clips were blurry and the experiment involved long training periods. But for the first time, brain activity was decoded well enough to reconstruct such complex stimuli in impressive detail.

Enormous potential

So what could fMRI do in the future? This is a topic we explore in our recent book Sex, Lies, and Brain Scans: How fMRI Reveals What Really Goes on in our Minds. One exciting area is lie detection. While early studies were mostly interested in finding the brain areas involved in telling a lie, more recent research has tried to actually use the technology as a lie detector.

As a subject in these studies, you will typically have to answer a series of questions. Some of your answers will be truthful, some will be lies. The computer model is told which ones are which at first, so it gets to know your “brain signature of lying” – the specific areas in your brain that light up when you lie, but not when you are telling the truth.

Afterwards, the model has to classify new answers as truth or lies. The typical accuracy reported in the literature is around 90%, meaning that nine times out of ten the computer correctly classified answers as lies or truths. This is far better than traditional measures such as the polygraph, which is thought to be only about 70% accurate. Some companies have now licensed the lie detection algorithms. Their next big goal: getting fMRI-based lie detection admitted as evidence in court.
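The train-then-classify procedure described above can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the numbers are synthetic rather than real fMRI data, the nearest-centroid rule is a stand-in (the studies’ actual algorithms are not specified here), and every function name is hypothetical.

```python
import random


def make_trial(is_lie, rng, n_features=5):
    """Synthetic 'brain activity' for one answer (an assumption, not real data).

    Lies shift the mean of every feature by 1.0; truths are centred on 0.0.
    """
    shift = 1.0 if is_lie else 0.0
    return [rng.gauss(shift, 1.0) for _ in range(n_features)]


def centroid(vectors):
    """Feature-wise mean of a list of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def train(trials, labels):
    """Learn one average pattern for lies and one for truths."""
    lies = [t for t, is_lie in zip(trials, labels) if is_lie]
    truths = [t for t, is_lie in zip(trials, labels) if not is_lie]
    return centroid(lies), centroid(truths)


def predict(model, trial):
    """Return True (lie) if the trial is closer to the lie pattern."""
    lie_centroid, truth_centroid = model
    return sq_dist(trial, lie_centroid) < sq_dist(trial, truth_centroid)


def accuracy(model, trials, labels):
    """Fraction of trials whose predicted label matches the true label."""
    correct = sum(predict(model, t) == label for t, label in zip(trials, labels))
    return correct / len(labels)


rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Phase 1: the model is told which answers are lies and which are truths.
train_labels = [i % 2 == 0 for i in range(200)]
train_trials = [make_trial(label, rng) for label in train_labels]
model = train(train_trials, train_labels)

# Phase 2: the model classifies new answers it has not seen before.
test_labels = [i % 2 == 0 for i in range(100)]
test_trials = [make_trial(label, rng) for label in test_labels]
acc = accuracy(model, test_trials, test_labels)
print(f"accuracy on new answers: {acc:.2f}")
```

The accuracy printed at the end is the same kind of figure as the 90% quoted in the literature: the share of previously unseen answers that the model labels correctly.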

These companies have tried several times now, but the judges have ruled that the technology is not ready for the legal setting – 90% accuracy sounds impressive, but would we want to send somebody to prison if there is a chance that they are innocent? Even if we can make the technology more accurate, fMRI will never be error proof. One particularly problematic topic is that of false memories. The scans can only reflect your beliefs, not necessarily reality. If you falsely believe that you have committed a crime, fMRI can only confirm this belief. We might be tempted to see brain scans as hard evidence, but they are only as good as your own memories: ultimately flawed.


Still, this raises some chilling questions about the possibility of a “Big Brother” future where our innermost thoughts can be routinely monitored. But for now fMRI cannot be used covertly. You cannot walk through an airport scanner and then be asked to step into an interrogation room because your thoughts alarmed the security personnel.

Undergoing fMRI involves lying still in a big, noisy tube for long periods of time. The computer model needs to get to know you and your characteristic brain activity before it can make any deductions. In many studies, this means that subjects were scanned for hours or over several sessions. There is obviously no chance of doing this without your knowledge – or even against your will. If you did not want your brain activity to be read, you could simply move in the scanner. Even the slightest movements can make fMRI scans useless.

Although there is no immediate danger of undercover scans, fMRI can still be used unethically. It could be used in commercial settings without appropriate guidelines. If academic researchers want to start an fMRI study, they need to go through a thorough process, explaining the potential risks and benefits to an ethics committee. No such guidelines exist in commercial settings. Companies are free to buy fMRI scanners and conduct experiments with any design. They could show you traumatising scenes. Or they might uncover thoughts that you wanted to keep to yourself. And if your scan shows any medical abnormalities, they are not obliged to tell you about it.

Mapping the brain in great detail enables us to observe sophisticated processes. Researchers are beginning to unravel the brain circuits involved in self-control and morality. Some of us may want to use this knowledge to screen for criminals or detect racial biases. But we must keep in mind that fMRI has many limitations. It is not a crystal ball. We might be able to detect an implicit racial bias in you, but this cannot predict your behaviour in the real world.

fMRI has a long way to go before we can use it to fire or incarcerate somebody. But neuroscience is a rapidly evolving field. With clever technological and analytical developments such as machine learning, fMRI might be ready for these futuristic applications sooner than we think. Therefore, we need to have a public discussion about these technologies now. Should we screen for terrorists at the airport or hire only teachers and judges who do not show evidence of a racial bias? Which applications are useful and beneficial for our society, and which ones are a step too far? It is time to make up our minds.